Sensor-based Task Oriented Grasp Synthesis

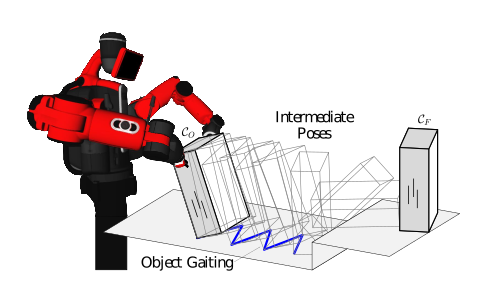

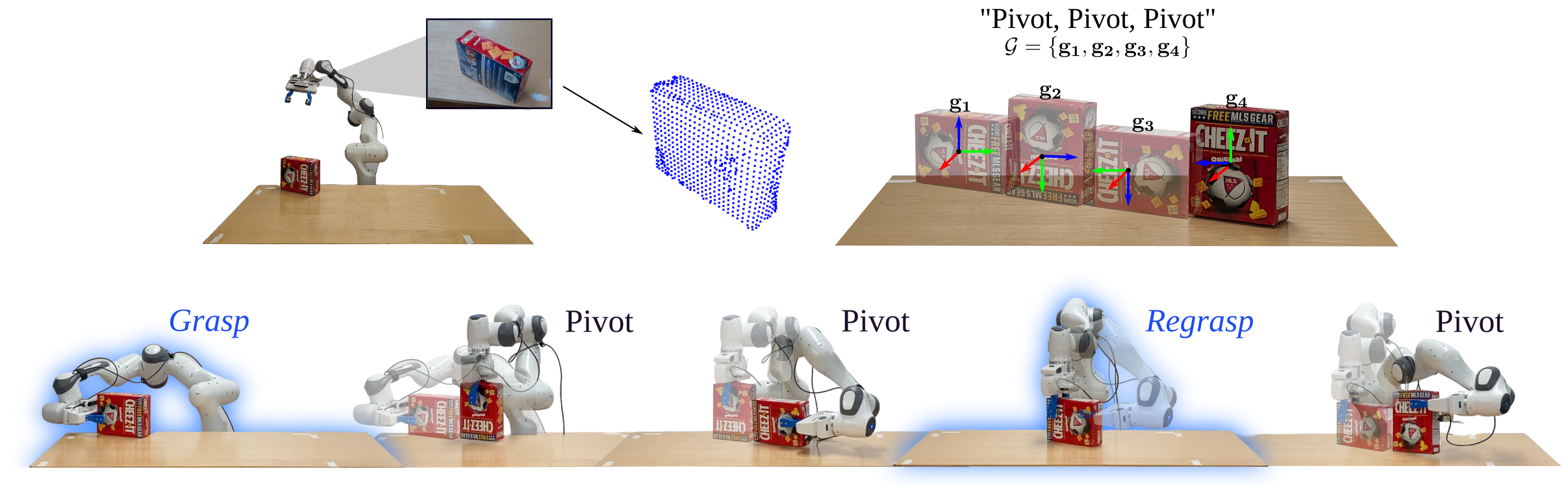

One of the key challenges in task-oriented grasp synthesis is representing a task mathematically. In our work, we represent a task as a sequence of constant screw motions. Given a grasp (a pair of antipodal contact locations), we can evaluate its feasibility for imparting the desired constant screw motion using our proposed task-dependent grasp metric. We have also developed a neural network-based approach that solves the inverse problem: given an object representation as a partial point cloud obtained from an RGBD sensor, and a task specified as a screw axis, it computes a good grasping region for the robot to grasp the object and impart the desired constant screw motion. This task representation also allows us to couple our approach to task-oriented grasp synthesis with screw geometry-based motion planners. For more details, please visit the project page. More recently, we have formalized the notion of regrasping in order to satisfy the motion constraints. Using our task-dependent grasp metric and a manipulation plan, we can compute whether an object must be regrasped while executing the plan or whether a single grasp suffices.
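To make the task representation concrete: a constant screw motion rotates a body by an angle θ about a fixed axis while translating it by pitch·θ along that axis. The following is a minimal NumPy sketch of that standard construction and of chaining several such motions into a task. The function names `screw_transform` and `apply_screw_sequence` are hypothetical, the screw axes are assumed to be expressed in the world frame, and pure translations (infinite pitch) are not handled. It illustrates the representation only, not the grasp metric or the neural network described above.

```python
import numpy as np


def skew(v):
    """Return the 3x3 skew-symmetric matrix of a 3-vector."""
    x, y, z = v
    return np.array([[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]])


def screw_transform(axis_dir, axis_point, pitch, theta):
    """Homogeneous transform of a finite-pitch constant screw motion.

    Rotates by `theta` (radians) about the line through `axis_point`
    with direction `axis_dir`, and translates `pitch * theta` along it.
    """
    u = np.asarray(axis_dir, dtype=float)
    u = u / np.linalg.norm(u)
    q = np.asarray(axis_point, dtype=float)
    K = skew(u)
    # Rodrigues' formula for the rotation about the unit axis u.
    R = np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)
    # A point x maps to R (x - q) + q + pitch * theta * u.
    p = (np.eye(3) - R) @ q + pitch * theta * u
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = p
    return T


def apply_screw_sequence(T_start, segments):
    """Apply world-frame screw segments in order; return all poses visited.

    Each segment is a tuple (axis_dir, axis_point, pitch, theta).
    """
    T = np.array(T_start, dtype=float)
    poses = [T.copy()]
    for axis_dir, axis_point, pitch, theta in segments:
        T = screw_transform(axis_dir, axis_point, pitch, theta) @ T
        poses.append(T.copy())
    return poses
```

For example, a two-segment task could be passed as `apply_screw_sequence(np.eye(4), [([0, 0, 1], [1, 0, 0], 0.0, np.pi / 2), ([1, 0, 0], [0, 0, 0], 0.1, 0.5)])`, which returns the start pose followed by the pose after each segment.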

Representing complex manipulation tasks, like scooping and pouring, as a sequence of constant screw motions in SE(3) allows us to extract the task-related constraints on the end-effector’s motion from kinesthetic demonstrations and transfer them to new instances of the same tasks. This approach has been evaluated on scooping and pouring, and also in the context of vertical containerized farming for transplanting and harvesting leafy crops.
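One building block for describing a demonstration in terms of constant screw motions is recovering the screw parameters of the displacement between two recorded end-effector poses. The sketch below shows that standard computation in NumPy. It is not the segmentation or transfer procedure used in this work; the function name `screw_from_poses` is hypothetical, poses are assumed to be 4x4 homogeneous transforms in the world frame, and the rotation angle is assumed to lie strictly between 0 and π.

```python
import numpy as np


def screw_from_poses(T_a, T_b, eps=1e-9):
    """Screw parameters of the world-frame displacement taking T_a to T_b.

    Returns (axis_dir, axis_point, pitch, theta), where axis_point is the
    point on the screw axis closest to the world origin.
    """
    D = np.asarray(T_b, dtype=float) @ np.linalg.inv(np.asarray(T_a, dtype=float))
    R, p = D[:3, :3], D[:3, 3]

    cos_theta = np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0)
    theta = np.arccos(cos_theta)
    if theta < eps or np.pi - theta < eps:
        raise ValueError("pure translation or half-turn is not handled in this sketch")

    # Axis direction from the skew-symmetric part of R.
    u = np.array([R[2, 1] - R[1, 2], R[0, 2] - R[2, 0], R[1, 0] - R[0, 1]])
    u = u / (2.0 * np.sin(theta))

    # Translation along the axis, and the resulting pitch.
    d = float(u @ p)
    pitch = d / theta

    # (I - R) q = p - d u is rank-deficient along u; the minimum-norm
    # least-squares solution is the axis point closest to the origin.
    q = np.linalg.lstsq(np.eye(3) - R, p - d * u, rcond=None)[0]
    return u, q, pitch, theta
```

Consecutive pose pairs whose recovered axis and pitch stay approximately constant could then be grouped into one screw segment, although the grouping criterion is a design choice this sketch leaves open.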

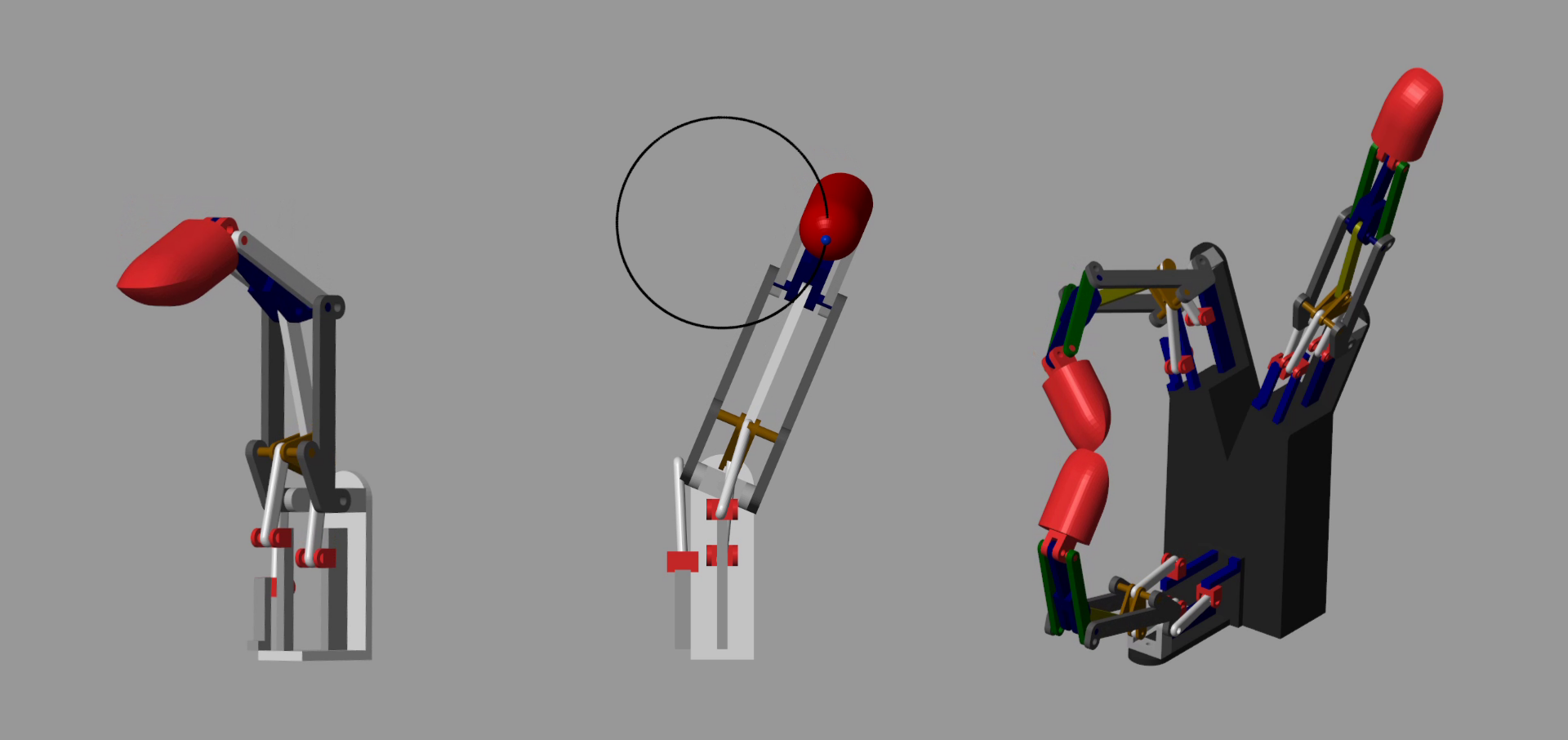

We have developed a novel 3-degree-of-freedom (3-DoF) series-parallel hybrid mechanism for a robotic finger that is capable of abduction/adduction and flexion/extension. We present the complete position kinematics and differential kinematics of our proposed finger mechanism and show through simulation examples that the fingertip can be kinematically controlled to follow a given path. Both position control and velocity control capabilities are demonstrated. For more details please refer to the papers and the
